[AINews] Titans: Learning to Memorize at Test Time • Buttondown

Chapters

Neural Memory at Test Time

AI Discord Recap

Examples of AI-related News and Discussions

Latent Space Discord

AI Model Performance and Usage Discussions

Interconnects (Nathan Lambert) Events

Discussions on Various AI Models and Optimizers

LM Studio: General Discussions

Eleuther Research Messages

GPU Mode - Cool Links (3 messages)

Interest in DSPy Examples and Shared Experiences

Neural Memory at Test Time

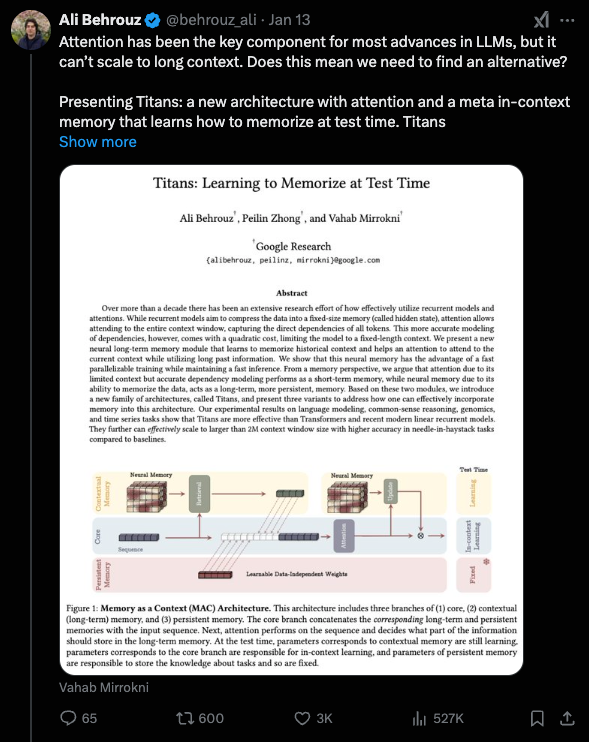

The latest Google paper, Titans, introduces an approach dubbed 'Transformers 2.0.' It integrates a neural memory directly into the architecture and updates it at test time, rather than handling memory separately. The paper uses a surprise measure to drive memory updates and weight decay to model forgetting, which improves how well the model uses long contexts. This issue also includes a Twitter recap covering AI models and scaling, applications and tools, security and ethical concerns, geopolitics and regulation, education and hiring, software engineering and coding automation, US politics and policy, infrastructure, and some light-hearted AI remarks.
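
To make the update rule concrete, here is a minimal Python sketch of a surprise-driven memory with weight-decay forgetting. It assumes a linear memory (a d-by-d matrix `M`) trained online on the squared error ||M k - v||^2, with constant gates `alpha` (forgetting), `eta` (momentum on past surprise), and `theta` (step size). The Titans paper itself uses a deeper MLP memory, learned key/value projections, and data-dependent gates, so the names and shapes here are illustrative only.

```python
from typing import List, Tuple

# Sketch only: linear memory with constant gates (assumption; Titans uses an
# MLP memory and data-dependent gates).
Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def zeros(d: int) -> Matrix:
    return [[0.0] * d for _ in range(d)]


def memory_step(M: Matrix, S: Matrix, k: Vector, v: Vector,
                alpha: float, eta: float, theta: float) -> Tuple[Matrix, Matrix]:
    """One test-time update of a linear associative memory.

    Momentary surprise is the gradient of ||M k - v||^2 with respect to M.
    S carries past surprise forward with momentum eta, and (1 - alpha)
    acts as weight decay that lets old associations fade.
    """
    # Prediction error of the memory for this key/value pair.
    err = [p - vi for p, vi in zip(matvec(M, k), v)]
    # d/dM ||M k - v||^2 = 2 * err * k^T (an outer product).
    grad = [[2.0 * e * kj for kj in k] for e in err]
    # Surprise: decayed past surprise minus scaled momentary surprise.
    S_new = [[eta * s - theta * g for s, g in zip(srow, grow)]
             for srow, grow in zip(S, grad)]
    # Forget a fraction alpha of the old memory, then add the surprise term.
    M_new = [[(1.0 - alpha) * m + s for m, s in zip(mrow, srow)]
             for mrow, srow in zip(M, S_new)]
    return M_new, S_new


M, S = memory_step(zeros(2), zeros(2), k=[1.0, 0.0], v=[0.0, 1.0],
                   alpha=0.0, eta=0.0, theta=0.5)
print(matvec(M, [1.0, 0.0]))  # prints [0.0, 1.0]
```

With `theta=0.5` a single step stores the pair exactly; setting `alpha=1.0` on the next step wipes the memory, which is the extreme case of the weight-decay forgetting described above.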

AI Discord Recap

- Theme 1: AI Model Performance Stumbles Across Platforms

- Users reported issues with platforms like Perplexity, Cursor IDE, and DeepSeek, facing outages, slowdowns, and latency problems, respectively.

- Theme 2: New AI Models Break Context Barriers

- New releases such as MiniMax-01, along with launches from Cohere and Mistral's FIM (fill-in-the-middle) code model, are extending context lengths and improving code completion.

- Theme 3: Legal Woes Hit AI Datasets and Developers

- Discussions covered a DMCA takedown of the MATH dataset and a trademark dispute over the name JavaScript; the takedown raised concerns about the accessibility of AI datasets.

- Theme 4: Advancements and Debates in AI Training

- Topics include progress on grokking, the challenges of dynamic quantization, and exploits of the TruthfulQA benchmark.

- Theme 5: Industry Moves Shake Up the AI Landscape

- Recent developments include Cursor AI's Series B funding, Anthropic's ISO certification, and the launch of NVIDIA Cosmos at CES.

Examples of AI-related News and Discussions

Users across various Discord channels discussed recent developments and challenges in AI and machine learning:

- Users debated built-in metrics for AI models and the potential risks of fake cryptocurrency.

- Hackathons and new AI projects such as Xeno Grant and Ndea were highlighted.

- Technological advances such as fault-tolerant chip designs from Cerebras were discussed.

- Concerns were raised over DMCA takedowns affecting datasets such as MATH.

- New research papers were shared, such as MVoT, which proposes new approaches to complex reasoning.

- Members shared experiences and challenges with OpenAI tools such as ChatGPT and GPT-4o.

- Discussions covered optimization techniques, model comparisons, and challenges such as handling large datasets and memory limits.

Latent Space Discord

- Cursor AI Scores Big Bucks: Cursor AI raised a new Series B funding round co-led by a16z to support its coding platform's next phase.

- Transformer² Adapts Like an Octopus: Sakana AI introduced Transformer², which adjusts its weights dynamically for each task; the paper compares this to the way an octopus blends into its surroundings.

- OpenBMB MiniCPM-o 2.6 Takes On Multimodality: The release of MiniCPM-o 2.6 showcases an 8B-parameter model that spans vision, speech, and language tasks on edge devices.

- Curator: Synthetic Data on Demand: The new Curator library offers an open-source approach to generating training and evaluation data for LLMs and RAG workflows.

- NVIDIA Cosmos Debuts at CES: NVIDIA Cosmos was presented at the LLM Paper Club following its CES launch, and attendees were urged to register for the session.

AI Model Performance and Usage Discussions

This section covers user discussions of various AI models, their performance, and members' experiences with them. Users reported slow output from Perplexity's llama-3.1-sonar-large-128k-online model and discussed possible causes and troubleshooting steps. Other topics included using the Command feature in Windsurf, the Discord challenge winners, the launch of the Windsurf Editor, subscription plans and student discounts, concerns about Codeium telemetry, and problems with remote repositories and with installing Codeium. Users also discussed Windsurf IDE issues, their experiences with Cascade, C# variable type analysis, and the integration of AI models. Finally, threads covered running Unsloth on multiple GPUs, common misconceptions about fine-tuning, model recommendations for web scraping, and compatibility problems with Ollama.

Interconnects (Nathan Lambert) Events

Mixer and Hackathon on Agent Identity:

- Join us for a Mixer on the 24th and a Hackathon on the 25th at Betaworks, NYC, focusing on agent identity with $5k in prizes for the most interesting projects.

- Food, drinks, and good vibes are included; check out the details on the event page.

Registration for Xeno Grant Hackathon:

- Registration is required for the Xeno Grant: Agent Identity Hackathon, which offers $10,000 per agent, half in $YOUSIM and half in $USDC.

- The program runs for 4 weeks for agents and their developers, and participation requires approval.

Discussions on Various AI Models and Optimizers

This section covers discussions of various AI models and optimizers: using the 4o-mini and Llama-8B models to generate original content, the grokking phenomenon and concerns about the Softmax function, whether GrokAdamW and Ortho Grad are compatible, skepticism toward replacing Softmax, and strategies for encouraging grokking. It also includes updating project titles in StackBlitz, challenges with the Grokfast optimizer, the Coconut GitHub project for training large language models, and interesting links related to AI research. Finally, it highlights user interactions with the Cohere Discord bot, issues with Cohere API keys and performance, and the potential of the Cohere Toolkit for the community.
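
For readers unfamiliar with the orthogonal-gradient idea, here is a minimal sketch. It assumes 'Ortho Grad' refers to the approach of discarding the part of each gradient that points along the current weights, which recent grokking work proposes as a way to keep updates from simply scaling up the weights (a failure mode it links to numerical problems in Softmax). Pure Python on flat weight lists is used for clarity; real implementations apply the projection per parameter tensor inside an optimizer, and some also rescale the projected gradient back to its original norm, which this sketch does not do.

```python
from typing import List

# Hypothetical helpers illustrating gradient projection; not a library API.
Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def orthogonal_grad(w: Vector, g: Vector, eps: float = 1e-30) -> Vector:
    """Project gradient g onto the subspace orthogonal to weights w.

    g_perp = g - (w.g / w.w) * w, so dot(w, g_perp) == 0 up to rounding.
    """
    coef = dot(w, g) / (dot(w, w) + eps)
    return [gi - coef * wi for gi, wi in zip(g, w)]


def sgd_step_orthogonal(w: Vector, g: Vector, lr: float) -> Vector:
    """Plain SGD step using the projected gradient.

    Because the step is orthogonal to w, ||w||^2 changes only by
    lr^2 * ||g_perp||^2, i.e. not at first order in lr.
    """
    g_perp = orthogonal_grad(w, g)
    return [wi - lr * gi for wi, gi in zip(w, g_perp)]


print(orthogonal_grad([1.0, 0.0], [3.0, 4.0]))  # prints [0.0, 4.0]
```

In the example, the gradient component along the weight direction (3.0) is removed, leaving only the component that rotates the weights rather than lengthening them.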

LM Studio: General Discussions

Exploring Fine-Tuning of Models:

A user is considering fine-tuning models on public-domain texts by a specific author, and is improving output quality with more directive prompts in the meantime. They are moving to Google Colab for fine-tuning; Python is new to them, but they are excited about using LLMs for writing.

Understanding User vs Assistant Mode:

User mode lets users send messages as themselves, while Assistant mode lets them write responses as the AI, which helps them steer the conversation. Users clarified that in Assistant mode, the model treats what they write as its own earlier output, which shapes the context for later turns.
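
Most local chat front ends keep the conversation as a list of role-tagged messages. We assume here that LM Studio's User and Assistant modes correspond to the standard `user` and `assistant` roles of the OpenAI-style chat format; the source does not say how LM Studio stores them. The hypothetical helper `build_messages` shows how text typed in Assistant mode becomes an `assistant` turn, which the model then reads as its own earlier reply.

```python
from typing import Dict, List, Tuple

# Hypothetical helper; assumes an OpenAI-style role/content message format.
Message = Dict[str, str]


def build_messages(system_prompt: str, turns: List[Tuple[str, str]]) -> List[Message]:
    """Assemble a chat history from (mode, text) pairs.

    mode is "user" for text sent as yourself and "assistant" for text
    written on the model's behalf (Assistant mode).
    """
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for mode, text in turns:
        if mode not in ("user", "assistant"):
            raise ValueError(f"unknown mode: {mode!r}")
        messages.append({"role": mode, "content": text})
    return messages


history = build_messages(
    "You are a concise writing assistant.",
    [
        ("user", "Suggest a title for my short story."),
        # Written by the user in Assistant mode: the model sees it as its own reply.
        ("assistant", "How about 'The Lighthouse Keeper'?"),
        ("user", "Give me two more in the same style."),
    ],
)
print([m["role"] for m in history])  # prints ['system', 'user', 'assistant', 'user']
```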

Managing Context Window Limitations:

Users discussed the 'context is 90.5% full' notification, which shows how much of the model's context window the current conversation occupies. Increasing the context size was suggested, but users were warned that a larger context increases the model's memory footprint.
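
The arithmetic behind both points is simple. The percentage is the number of tokens in use divided by the context length, and most of the extra memory from a longer context goes to the KV cache, which stores one key and one value vector per layer, per KV head, per token. The rough estimator below assumes an unquantized fp16 cache (2 bytes per element) and ignores model weights and activations; the token counts are illustrative, and the layer and head numbers follow the published Llama 3.1 8B configuration.

```python
def context_fill_percent(tokens_used: int, context_length: int) -> float:
    """Share of the context window currently occupied, in percent."""
    return 100.0 * tokens_used / context_length


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   n_ctx: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size: K and V tensors for every layer and token."""
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_elem


# Illustrative numbers: 3,707 tokens in a 4,096-token window.
print(round(context_fill_percent(3707, 4096), 1))  # prints 90.5

# Llama-3.1-8B-style shape (32 layers, 8 KV heads, head_dim 128), fp16 cache.
for n_ctx in (4096, 8192, 32768):
    gib = kv_cache_bytes(32, 8, 128, n_ctx) / 2**30
    print(f"{n_ctx:>6} tokens -> {gib:.2f} GiB")
```

The cache grows linearly with context length: for this shape it is about 0.5 GiB at 4,096 tokens, 1 GiB at 8,192, and 4 GiB at 32,768, on top of the weights.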

Resolving Model Loading Problems:

Users shared experiences with loading models and troubleshooting errors related to high memory usage and system specifications. Discussions touched on potential solutions like adjusting model layers and settings to optimize performance on varying hardware.
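
If "adjusting model layers" means choosing how many layers to offload to the GPU (our assumption; the source does not name the setting), a back-of-the-envelope estimate can suggest a starting value. The hypothetical helper below assumes all layers are the same size, treats the model file size as the weights' memory footprint, and reserves a fixed headroom for the KV cache and runtime buffers. Real usage varies, so treat the result as a first guess to lower if loading still fails.

```python
def layers_that_fit(vram_gib: float, model_file_gib: float, n_layers: int,
                    reserve_gib: float = 1.0) -> int:
    """Rough count of transformer layers that fit in GPU memory (hypothetical heuristic).

    Assumes equal-sized layers and treats the model file size as the
    weight footprint; reserve_gib is headroom for KV cache and buffers.
    """
    if n_layers <= 0 or model_file_gib <= 0:
        raise ValueError("n_layers and model_file_gib must be positive")
    per_layer_gib = model_file_gib / n_layers
    usable_gib = max(0.0, vram_gib - reserve_gib)
    return min(n_layers, int(usable_gib // per_layer_gib))


# Illustrative: a 9 GiB quantized model with 36 layers on a 4 GiB GPU.
print(layers_that_fit(4.0, 9.0, 36))  # prints 12
```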

Image Analysis Capabilities with LLMs:

Several users reported difficulties in getting models like QVQ-72B and Qwen2-VL-7B-Instruct to analyze images correctly. Ensuring that runtime environments are up to date was emphasized as crucial for successful image analysis functionality.

Eleuther Research Messages

A recent abstract introduced the concept of critical tokens: tokens that are pivotal to an LLM's reasoning and can strongly affect its accuracy on datasets such as GSM8K and MATH500. Identifying and minimizing such tokens could improve model performance, which aligns with observations about implicit PRMs (process reward models). Members also discussed VinePPO, clarifying how it is used and noting that it does not require example chain-of-thought (CoT) trajectories.

A new NanoGPT speed record was set using changes such as new lm_head biases and fused operations, prompting discussion of further optimizations. Weaknesses in the TruthfulQA dataset were exploited to reach 79% accuracy, raising concerns about the dataset's reliability. Members likewise pointed out that datasets such as HaluEval contain many incorrect human annotations, which can lead to misleading results. The Hendrycks MATH dataset received a DMCA takedown notice, and members acknowledged AoPS (Art of Problem Solving) as the source of its questions.

EleutherAI members also ran into problems converting NeoX models, missing 'module' attributes, confusion over the intermediate size, layer-masking issues, and incompatibility between ZeRO stages and model parallelism.

Elsewhere, Cursor AI secured Series B funding, Transformer² introduced task-specific dynamic weight adjustments, AI tutoring in Nigeria showed remarkable gains, OpenBMB released the multimodal MiniCPM-o 2.6, and Curator was introduced as a tool for synthetic data generation. The Latent Space group discussed the NVIDIA Cosmos launch at CES, while the GPU MODE channels covered Triton's dependency on Torch, cuBLAS equivalents, TMA features on RTX 50-series Blackwell cards, and details of the Torch compiler. Finally, MiniMax launched models built on its Lightning Attention mechanism, aimed at ultra-long context processing at low cost.

GPU Mode - Cool Links (3 messages)

Weight decay essential for bfloat16 training

One suggestion stressed that weight decay should be used when training with bfloat16 to avoid divergence, as shown in Fig. 8 of the paper.

- This practical advice aims to enhance the performance of models utilizing bfloat16.
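
The sketch below shows where a decoupled weight-decay term enters an update whose result is stored in bfloat16. It emulates bfloat16 in pure Python by rounding a float32 to its upper 16 bits (1 sign, 8 exponent, and 7 mantissa bits). It uses plain SGD with decoupled decay (SGDW-style) rather than any particular optimizer from the cited paper, which the source does not name. It also illustrates a related bfloat16 property: updates much smaller than the gap between neighboring representable values are rounded away.

```python
import struct
from typing import List


def to_bf16(x: float) -> float:
    """Round x to the nearest bfloat16 value (round half to even).

    bfloat16 keeps the upper 16 bits of an IEEE-754 float32. NaN/inf and
    values outside the float32 range are not handled in this sketch.
    """
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    # Rounding bias: 0x7FFF, plus 1 if the kept half is odd (ties to even).
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


def sgdw_step_bf16(w: List[float], grad: List[float], lr: float,
                   weight_decay: float) -> List[float]:
    """SGD step with decoupled weight decay, storing weights in bfloat16.

    The decay shrinks each weight by a factor (1 - lr * weight_decay),
    independently of the gradient term -lr * grad.
    """
    return [to_bf16(wi * (1.0 - lr * weight_decay) - lr * gi)
            for wi, gi in zip(w, grad)]


# Just below 1.0, adjacent bfloat16 values are 2**-8 (about 0.004) apart,
# so a change of 1e-4 is lost to rounding.
print(to_bf16(1.0 - 1e-4))  # prints 1.0
print(sgdw_step_bf16([2.0, -2.0], [0.0, 0.0], lr=0.5, weight_decay=0.5))  # prints [1.5, -1.5]
```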

A100 Animation Gains Attention

A shared tweet noted that everyone working with GPUs needs to internalize the insights from a brilliant animation of the A100 GPU by @vrushankdes.

- The animation sparked interest and discussion of GPU capabilities and optimizations.

Questions on GPT-3 Architecture Access

A member questioned how vendors participating in MLPerf access the architecture and weights of GPT-3, given that they are not open-sourced.

- This raised discussions around the accessibility and proprietary nature of model architectures in the competitive landscape of machine learning.

Interest in DSPy Examples and Shared Experiences

A member asked for concrete DSPy examples of ambient agents, reflecting broader community curiosity about how such agents are implemented in practice. Others asked members to share their experiences and implementations, to standardize approaches and learn from those who have already worked on similar projects.

FAQ

Q: What is the concept of 'Transformers 2.0' in the latest Google paper?

A: Dubbed 'Transformers 2.0,' the Titans paper integrates a neural memory directly into the architecture and updates it at test time, rather than handling memory separately. It uses a surprise measure to drive memory updates and weight decay to model forgetting.

Q: What are some of the key themes in this issue related to AI models and developments?

A: This issue covers themes such as AI model performance problems, breakthroughs in context length, legal challenges around AI datasets, advances in AI training, and industry moves that are reshaping the AI landscape.

Q: How do users on Discord channels engage in discussions related to AI and machine learning fields?

A: Users on Discord discuss topics such as built-in metrics for AI models, risks of fake cryptocurrency, new AI projects like Xeno Grant and Ndea, technological advancements by companies like Cerebras, and issues with datasets and AI models.

Q: What are some of the challenges and advancements discussed regarding fine-tuning AI models?

A: One user is exploring fine-tuning models on public-domain texts, moving to Google Colab, and improving output quality through directive prompts. The discussions also cover managing context window limitations, model loading problems, and image analysis with LLMs.

Q: What advice is given regarding weight decay and training with bfloat16?

A: A suggestion shared in the GPU MODE channel is that weight decay should be used when training with bfloat16 to avoid divergence, improving the performance of models trained this way.

Q: What discussions have arisen around GPT-3 architecture access?

A: There are questions surrounding how vendors participating in MLPerf access the architecture and weights of GPT-3, given their proprietary nature, sparking debates on the accessibility and competitive landscape of machine learning architectures.
