[AINews] Talaria: Apple's new MLOps Superweapon • ButtondownTwitterTwitter

Chapters

Apple's New MLOps Superweapon

AI Twitter Recap

AI Community and Event Highlights

OpenInterpreter Discord

LangChain AI Discord

Perplexity AI General

Perplexity AI ▷ #

LLM Finetuning (Hamel + Dan): Group Discussions

LLM Finetuning: Capelle Experimentation

Nous Research AI - Unusual Breakfast Choices, GPU Setups, and AI Innovations

Unsloth AI Discussion

CUDA MODE Discussions

CUDA Mode Discussions

HuggingFace Diffusion Discussions

LM Studio Chat Discussions

Discussion on OpenAI GPT-4 and its Challenges

Modular (Mojo 🔥) Updates

OpenInterpreter AI Content

Discussing LAION, Cohere, and LlamaIndex

OpenRouter & LlamaIndex Updates

LangChain AI General Discussions

AI Town Dev Discussions

Apple's New MLOps Superweapon

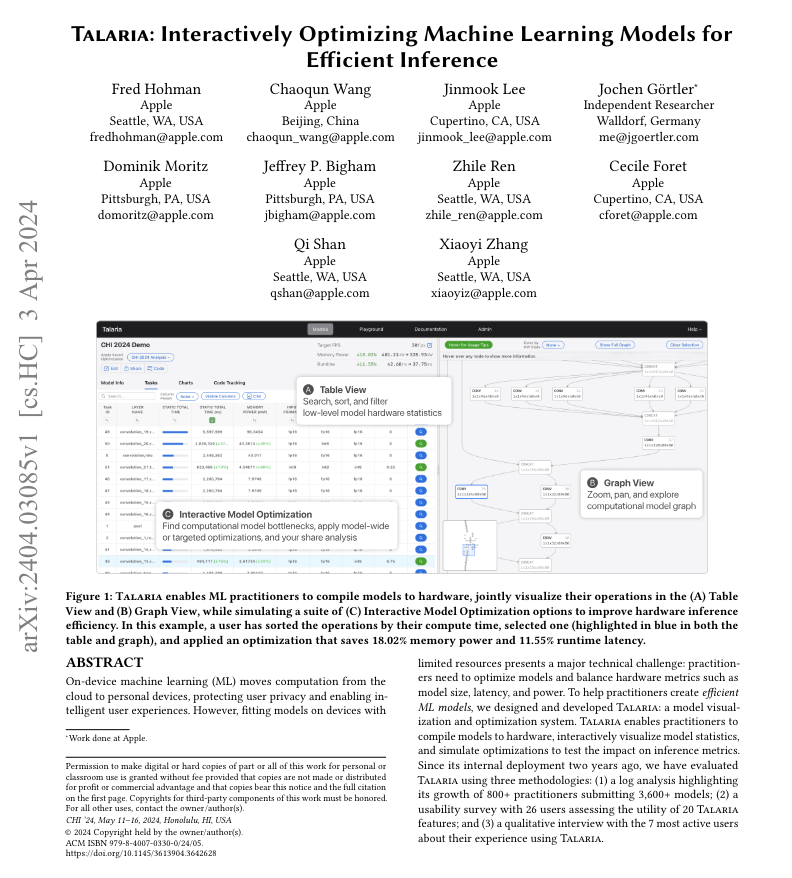

Apple introduced Apple Intelligence, a game-changing MLOps model that reportedly surpasses Google Gemma, Microsoft Phi, and others. The model includes an on-device version with innovative quantization strategies and high-performance capabilities. Talaria, a key tool for on-device inference, enables quick iteration and performance tracking for various architectures. Apple's focus on practical performance rather than academic competition may be its key to success in the AI field.

AI Twitter Recap

Andrej Karpathy's New YouTube Video on Reproducing GPT-2 (124M)

- Karpathy released a new YouTube video titled 'Let's reproduce GPT-2 (124M)' featuring a comprehensive 4-hour video lecture on building, optimizing, training, and evaluating the GPT-2 model.

- The video covers exploring GPT-2 checkpoint, implementing GPT-2 nn.Module, utilizing techniques like mixed precision, flash attention, setting hyperparameters, and achieving close to GPT-3 (124M) performance.

- An associated GitHub repo contains the full commit history for code changes.

Apple's WWDC AI Announcements

- Apple's WWDC lacked significant AI announcements that impressed observers.

- Speculations about 'Apple Intelligence' and partnership with OpenAI were not confirmed during the event.

Intuitive Explanation of Matrix Multiplication

- Thread on Twitter explaining matrix multiplication's importance in modern machine learning with step-by-step breakdown and visualizations.

Apple's Ferret-UI: Multimodal Vision-Language Model for iOS

- Discussion of Apple's Ferret-UI, a vision-language model for iOS analyzing icons, widgets, and text relationships with potential application as an on-device AI assistant.

AI Investment and Progress

- Over $100B spent on NVIDIA GPUs since GPT-4 training in fall 2022, questioning if AI model advancements will match the investment.

- Concerns over hitting a data wall potentially slowing AI progress, industry divided on short-term impediment versus a meaningful plateau.

Perplexity as Top Referral Source for Publishers

- Perplexity driving traffic to publishers, being a top referrer for several publishers.

- Perplexity working on new engagement products and aligning long-term incentives with media companies.

Yann LeCun's Thoughts on Managing AI Research Labs

- Importance of reputable scientists in research lab management to identify talent, provide freedom and resources, set research directions, inspire goals, and evaluate individuals beyond traditional metrics.

- Emphasis on fostering intellectual weirdness and accommodating truly creative individuals.

Reasoning Abilities vs. Storing and Retrieving Facts

- Distinguishing reasoning abilities from memorization skills, recognizing common sense and reasoning as separate from storing and retrieving facts.

AI Community and Event Highlights

This section highlights the latest announcements and events in the AI community. It includes new speakers and sold-out tickets at the AI Engineer World’s Fair, intriguing projects like Websim.ai’s recursive exploration, the release of the ICLR 2024 podcast Part 2, and discussions on multilingual transcription, security, API token management, performance analysis, and fine-tuning techniques. The technical innovations and discussions cover a wide range of topics such as leveraging CUDA profiling for performance optimization, structured concurrency in programming languages like Mojo, and updates from various discord channels like Stability.ai, Perplexity AI, LLM Finetuning, Nous Research AI, Unsloth AI, CUDA MODE, HuggingFace, and LM Studio.

OpenInterpreter Discord

Gorilla OpenFunctions v2 Matches GPT-4

Community members are discussing the capabilities of Gorilla OpenFunctions v2 and its impressive performance in generating executable API calls. Meanwhile, Local II has announced support for a local OS mode, allowing for potential live demos. Users have reported various issues with OI models, including API key errors and problems with vision models like moondream. A breakthrough has been reached with the integration of Open Interpreter and iPhone's Siri, enabling voice commands to execute terminal functions. Attempts to run OI on Raspberry Pi have encountered resource issues, sparking determination to find solutions and a broader desire for cross-platform support.

LangChain AI Discord

Markdown Misery and Missing Methods:

Engineers reported issues with processing a 25MB markdown file in LangChain, as well as difficulties with using create_tagging_chain() due to ignored prompts. These issues suggest potential bugs or gaps in documentation.

Secure Your Datasets with LangChain and Bagel:

LangChain's integration with Bagel aims to offer secure dataset management, potentially enhancing infrastructure for data-intensive applications.

Document Dilemmas:

Discussions focused on document loading and splitting for LangChain use, highlighting the need for technical finesse in handling various document types for optimized language model performance.

API Ambiguities:

A query was raised regarding the use of api_handler() in LangServe without add_route(), specifically to implement playground_type="default" or "chat" without clear guidance.

AI Innovations Invite Input:

Community members were invited to beta test the new research assistant, Rubik's AI, which offers access to models like GPT-4 Turbo. Various community projects were also highlighted, showcasing a robust development and testing environment.

Perplexity AI General

This section discusses various topics related to Perplexity AI in a Discord channel with 905 messages. Users express frustration with AI travel planning, enhancements in Perplexity AI's search features, issues with Perplexity Pages indexing, debates over GPT-4 models, and warnings against the Rabbit R1 device. Additionally, links to news about a Buzzy AI search engine's controversial practices, supported models, North Korea sending trash balloons, and various GIF links are mentioned.

Perplexity AI ▷ #

This section provides updates and discussions from the Perplexity AI community. Users inquire about various topics such as language model generation rules, rescuing hostages, AI helpers, the GTD method, Starliner spacecraft success, and the new Apple Intelligence system. There are also discussions on integrating external web search with GPT, along with inquiries and solutions related to API credits, model fine-tuning, and personal productivity systems. The section offers a glimpse into the diverse and dynamic conversations within the Perplexity AI community.

LLM Finetuning (Hamel + Dan): Group Discussions

LLM Finetuning (Hamel + Dan) Group Discussions

- Text-to-SQL Benchmarks Emphasize GroupBy but Miss Filter/WHERE Clauses: Benchmark focus tends to be on GroupBy cases rather than high cardinality columns in Filter/WHERE clauses. Different results noted for querying AWS vs. Amazon Simple Storage Service based on filter conditions.

- Navigating prompt templates and Pydantic models: Members seek clarity on prompt-based templates and where Pydantic models fit, especially in chat models.

- Lectures hailed as excellent: User appreciates quality of recent lectures, emphasizes multiple viewings and practical implementation.

- Replicate's vLLM Model Builder now found: User finds vLLM Model Builder in Replicate's UI and shares GitHub link. Workshop recordings and extra credits on Modal platform also discussed.

- Hierarchical Pooling Added to ColBERT: Pull request for ColBERT supports optional hierarchical pooling. Discussions delve into LLMs, RAG techniques, and need for integration methods information.

- Fused vs Paged Optimizers Debate: Members discuss benefits of fused vs paged optimizers and experiences with adamw_bnb_8bit. Other topics include entity extraction, category metadata in product recommendations, and exploring custom embedding models for classification.

LLM Finetuning: Capelle Experimentation

Free Intro to Weave

A member shared a notebook link to learn the basics of Weave, useful for tracking function calls, publishing and retrieving versioned objects, and evaluating with a simple API.

Quick Course on W&B

A 10-minute video course on W&B was shared to help users discover essential features of Weights & Biases, enhance machine learning productivity, and learn integration with Python scripts.

Join Inspect_Ai Collaboration

Nous Research AI - Unusual Breakfast Choices, GPU Setups, and AI Innovations

- Fermented Fireweed Tea for Breakfast: Unique breakfast choices shared by members, including fermented fireweed tea and other items.

- Complexities of Using Mixed GPUs: Discussion on the challenges of having different GPUs in a machine learning rig.

- σ-GPT generates sequences dynamically: Insights on σ-GPT's ability to generate sequences in any order at inference time.

- Extracting Concepts from GPT-4: Comparison between OpenAI's blog on extracting concepts from GPT-4 and a publication by Anthropic.

- Krita Plugin Recommended for Outpainting: Recommendation of a Krita stable diffusion plugin for outpainting.

- Insane Performance of 72b Model: Comparisons of the 72b model with GPT-4 in terms of mathematical and physical reasoning capabilities.

- Experiment on Layer Pruning Strategies: Community discussion on pruning strategies for models like Llama 3 70b and Qwen 2 72b.

- Concerns Over GPU Cloud Costs and Resources: Sharing of resources for affordable GPU cloud services and challenges of hosting large models.

- Legal and Ethical Discussion on AI Regulation: Debate sparked by a tweet discussing AI regulation's potential impact on innovation.

- HippoRAG and Raptor: The Future of Clustering: Highlighting clustering over knowledge graphs for efficient language model training.

- Schema Debates for RAG: Discussions on JSON schemas for model data input and output.

- Ditto and Dynamic Prompt Optimization (DPO): Delving into Ditto's potential with online comparison and iterative alignment.

- Standardizing Multi-Metric Outputs: Debates on including metrics like relevance and sentiment directly into datasets.

- Cohere's Retrieval and Citation Mechanism: Examination of Cohere's retrieval system and handling of citations.

- Recursive AI visualization stuns: Progress in visualizing recursive AI without revealed details.

- Command line copy-paste bug squashed: Resolution of issues copying and pasting text from the command line interface.

Unsloth AI Discussion

Unsloth AI (Daniel Han) Discussion

- ETDisco debates QLoRA vs DoRA: Differences between QLoRA, DoRA, and QDoRA were discussed, highlighting their unique features.

- Model Arithmetic Tip: Fine-tuning technique involving weights from L3 base and L3 instruct was shared, leading to a conversation on model merging nuances.

- Finetune Codegemma Redesign: Feedback was sought on a graphic design for Codegemma finetune, with detailed suggestions given for improvement.

For more detailed information about the topics discussed, visit the respective links provided.

CUDA MODE Discussions

- FlagGems Sparks Interest: Discussion on a project called FlagGems in the Triton Language.

- General Kernel Dimensions Query: Users seeking advice on handling general kernels without fixed dimensions in Triton.

- State-of-the-Art Triton Kernels: Inquiry about resources for advanced Triton kernels with solutions.

- BLOCK_SIZE and Chunking in Triton: Clarification on handling arbitrary Block Sizes in Triton.

- CUDA MODE Discussions: Detailed discussions on FPGA costs, mixed precision formats, Torch.compile configuration, Matmul templates, and benchmarking.

CUDA Mode Discussions

Bit-Level Trickery:

- Unique bit representation method using 0 = 11, -1 = 01, and 1 = 00 for efficient operations.

- Potential bug identified.

FPGA Costs vs. A6000 ADA:

- Cost-effectiveness of custom FPGAs questioned; A6000 ADA GPUs suggested.

- Highlighted Bitblas' 2-bit kernel speed-ups.

NVIDIA Cutlass and Bit-Packing:

- Exploring Cutlass library's support for nbit bit-packing with uint8 formats.

- Shared links to relevant documentation and GitHub repositories.

Collaboration Meeting Scheduled:

- Meeting set up to discuss projects using BitBlas and other kernels.

- Focus on PR and documentation updates.

- Shared GitHub links and meeting times.

BitBlas Benchmarks and Insights:

- Benchmark results comparing BitBlas to PyTorch's matmul fp16.

- Noted speed differences with 4-bit and 2-bit operations.

- Highlighted varied performance based on input size and batch-size.

HuggingFace Diffusion Discussions

Several discussions took place in the HuggingFace diffusion channel on different topics related to training models and utilizing specific tools. Users asked questions about training with conditional UNet2D models, imprinting text into images using SDXL, calculating MFU during training, and the differences between HuggingFace scripts and custom notebooks for finetuning SDXL models. Links to relevant resources and tools were shared for further reference and exploration.

LM Studio Chat Discussions

LM Studio ▷ #🤖-models-discussion-chat (34 messages🔥):

- Users humorously discuss obfuscation in code and the unobfuscation capabilities of LLM.

- The VNTL Leaderboard ranks LLMs based on their translation abilities of Japanese Visual Novels.

- Users share their experiences with Gemini Nano models and stable diffusion for image editing.

- A user successfully merges two models and seeks advice on using the alpaca chat template.

LM Studio ▷ #🧠-feedback (13 messages🔥):

- Users discuss limitations and feedback on LM Studio, including the need for stop strings functionality and concerns about closed-source nature.

- A user shares that disabling the mmap flag reduces memory usage in LM Studio.

LM Studio ▷ #📝-prompts-discussion-chat (1 messages):

- Members discuss the importance of giving clear, positive instructions for better results with AI models.

LM Studio ▷ #⚙-configs-discussion (4 messages):

- Users resolve miscommunications regarding function calling and discuss issues with NVIDIA GT 1030 compatibility.

LM Studio ▷ #🎛-hardware-discussion (228 messages🔥🔥):

- Users share challenges and solutions related to cooling Tesla P40 GPUs, handling multi-GPU setups, and optimizing hardware for AI tasks.

LM Studio ▷ #🧪-beta-releases-chat (2 messages):

- Members anticipate improvements for Smaug models and inquire about collecting LMS data to external servers.

LM Studio ▷ #autogen (5 messages):

- Members share solutions for issues with the Autogen app, mixed results with different models, and seek advice on fine-tuning models.

LM Studio ▷ #langchain (13 messages🔥):

- Users discuss using llama3 for instruction following and experimenting with OpenAI integration and Mistral models.

LM Studio ▷ #amd-rocm-tech-preview (15 messages🔥):

- Discussions revolve around upgrading AMD systems, GPU isolation techniques, and exploring stable.cpp and Zluda for AMD GPU usage.

LM Studio ▷ #🛠-dev-chat (1 messages):

- A new member seeks GPU configuration support for an old GT Nvidia 1030 GPU in LM Studio.

OpenAI ▷ #announcements (1 messages):

- OpenAI partners with Apple to integrate ChatGPT into iOS, iPadOS, and macOS.

OpenAI ▷ #ai-discussions (216 messages🔥🔥):

- Topics include concerns about Whisper's multilingual transcription, Apple's 'Apple Intelligence', security concerns with OpenAI API prompts, challenges with image generation services, and AI model integrations in consumer tech.

Discussion on OpenAI GPT-4 and its Challenges

The discussion highlighted various challenges faced with OpenAI's GPT-4 model. Members expressed frustration with GPT agents being stuck with GPT-4o instead of GPT-4, leading to poor performance in structured prompts. Concerns were raised about the high costs associated with token limits, particularly in the 128k context call. Additionally, debates arose regarding image tokenization costs and how images are resized for tokenization purposes. Confusion prevailed regarding the privacy and external integration of custom GPTs, clarifying that custom GPTs are private by default and cannot be integrated externally. Furthermore, delays in the rollout of new voice modes for Plus users were questioned, with the ambiguous timeline of 'coming weeks' noted humorously. Overall, the conversation shed light on the challenges and limitations encountered while working with GPT-4 and its functionalities.

Modular (Mojo 🔥) Updates

Installing MAX on MacOS Requires Manual Fixes:

Users encountered issues installing MAX on MacOS 14.5 Sonoma and provided solutions using Python 3.11 with pyenv.

Structured Concurrency vs. Function Coloring Debate:

Discussion on structured concurrency vs. function coloring, with members sharing opinions on complexity and performance.

Concurrency in Programming Languages:

Conversation covering concurrency primitives and the strengths of Erlang/Elixir, Go, and async/await mechanisms.

MLIR and Mojo:

Discussion on the relevance of MLIR dialects in Mojo's async operations and the mention of async dialect in MLIR’s docs.

Funding and Viability of New Programming Languages:

Dialogue about the financial backing required for developing new programming languages, with comparisons to Rust and Zig teams.

OpenInterpreter AI Content

The section discusses various interactions and conversations within the OpenInterpreter community related to technical issues and challenges faced by users. These include inquiries about scenario outputs, struggles with setup on different devices like Raspberry Pi, and requests for installation tutorials. The community also shares experiences with connecting devices and seeking solutions to various technical difficulties.

Discussing LAION, Cohere, and LlamaIndex

In the AI communities of LAION, Cohere, and LlamaIndex various topics were discussed. In LAION, discussions included dynamic sequence generation with σ-GPT, alternative to autoregressive models, transformer embedding analysis, prompt-based reasoning challenges, and condition embedding perturbation testing. Cohere members explored the platform's versatility, in-character roleplay with AI, community member introductions, project and career aspirations, and encouragement from the community. In LlamaIndex, integrations for data analysis and enhancements, building agentic RAG systems, query rewriting for enhanced RAG, and creating a voicebot for customer service were discussed.

OpenRouter & LlamaIndex Updates

This section provides updates on various services and models related to OpenRouter and LlamaIndex. These updates include the launch of new models like Qwen 2 72B Instruct, Dolphin 2.9.2 Mixtral 8x22B, and StarCoder2 15B Instruct by OpenRouter. Additionally, discussions on using various AI models for different tasks, creating datasets for model training, handling API calls, and optimizing content quality and structure are covered. Users also shared their experiences with products like the Daylight Computer and the challenges of early adoption. Nathan Lambert also shared his farewell message as he leaves the Bay Area, and sought tutorials on language modeling for a proposal submission to NuerIPs.

LangChain AI General Discussions

LangChain AI 🔥🔥🔥🔥🔥 (66 messages🔥🔥):

- Members discussed difficulties with markdown file processing and announced LangChain's integration with Bagel for secure dataset management.

- Queries were raised about customizable tagging chains, special characters handling in retrieval, and optimizing document loaders and splitters for various file types.

- Discussions also touched on ongoing talks about SRPO addressing RLHF task dependency and the LangChain AI framework.

AI Town Dev Discussions

- Convex.json config file goes missing: A user expressed difficulty locating the

convex.jsonconfig file, suggesting potential confusion or misplacement in the file structure. - Convex backend error issues: When attempting to run the Convex backend, an error occurred stating, 'Recipe convex could not be run because just could not find the shell: program not found,' hinting at a missing dependency or misconfiguration.

- Limitations in model architectures: In response to a discussion about the limitations in model architectures such as RNNs, CNNs, SSMs, and Transformers, it was clarified that these models struggle to perform real reasoning due to their structural constraints, as highlighted by Theorem 1.

- Dive into UMAP with its creator: Vincent Warmerdam shared a YouTube video titled 'Moving towards KDearestNeighbors with Leland McInnes - creator of UMAP,' delving into the nuances of UMAP, PyNNDescent, and HDBScan, and featuring insights from Leland McInnes himself.

FAQ

Q: What is Apple Intelligence and how does it differ from other MLOps models like Google Gemma and Microsoft Phi?

A: Apple Intelligence is a game-changing MLOps model introduced by Apple that reportedly surpasses Google Gemma and Microsoft Phi. It includes an on-device version with innovative quantization strategies and high-performance capabilities, focusing on practical performance rather than academic competition.

Q: What are the key features of Apple's on-device version of the MLOps model, including the tool Talaria?

A: The on-device version of Apple's MLOps model includes innovative quantization strategies and high-performance capabilities. Talaria, a key tool for on-device inference, enables quick iteration and performance tracking for various architectures.

Q: What did Andrej Karpathy cover in his YouTube video 'Let's reproduce GPT-2 (124M)'?

A: In his video, Andrej Karpathy covered building, optimizing, training, and evaluating the GPT-2 (124M) model. He explored the GPT-2 checkpoint, implemented the GPT-2 nn.Module, utilized techniques like mixed precision and flash attention, and achieved close to GPT-3 (124M) performance.

Q: What were the speculations and announcements regarding Apple's AI initiatives at WWDC?

A: Apple's WWDC lacked significant AI announcements that impressed observers. Speculations about 'Apple Intelligence' and a partnership with OpenAI were not confirmed during the event.

Q: Can you explain the importance of matrix multiplication in modern machine learning and provide a visual explanation?

A: Matrix multiplication is crucial in modern machine learning for various operations. It helps in performing tasks like feature extraction, dimensionality reduction, and neural network computations. A visual explanation can help understand how matrices are multiplied to derive meaningful results.

Q: What is the significance of Apple's Ferret-UI, and how does it contribute to iOS applications?

A: Apple's Ferret-UI is a vision-language model for iOS that analyzes icons, widgets, and text relationships. It has potential applications as an on-device AI assistant, enhancing user experience and interaction with iOS devices.

Q: What are Yann LeCun's thoughts on managing AI research labs?

A: Yann LeCun emphasizes the importance of reputable scientists in managing AI research labs. He believes in identifying talent, providing freedom and resources, setting research directions, inspiring goals, and evaluating individuals beyond traditional metrics. He also highlights the significance of fostering intellectual weirdness and accommodating creative individuals.

Q: How do reasoning abilities differ from memorization skills, and why is it important to distinguish between them?

A: Distinguishing reasoning abilities from memorization skills is crucial in understanding common sense and reasoning as separate from storing and retrieving facts. Reasoning abilities involve logical thinking and problem-solving, while memorization skills focus on retaining and recalling information. Recognizing this distinction helps in developing advanced AI systems and cognitive models.

Q: What are the key discussions and innovations in the AI community regarding AI investments, models, and advancements?

A: Discussions include concerns over the significant investment in NVIDIA GPUs since GPT-4 training in fall 2022, questioning if AI model advancements will match the investment. Additionally, there are debates about hitting a data wall potentially slowing AI progress and the industry being divided on short-term impediments versus a meaningful plateau in AI advancements.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!