[AINews] Problems with MMLU-Pro • Buttondown

Chapters

AI News - Problems with MMLU-Pro

Technology Advancements in AI Discord Recap

Exploring Recent AI Community Conversations

Detailed AI Chatroom Discussions

HuggingFace Cool Finds

HuggingFace Computer Vision Updates

LM Studio Models and Vision Capabilities

Efficient Coding with LLMs

AI Engineer World Fair Highlights

Nous Research AI ▷ world-sim

Mojo and Eleuther Discussions

LangChain AI: General Discussion

LlamaIndex Blog

Multi-Document Financial Analyst Agent, RAG Retrieval Evaluations, and AI Application Mentorship Requests

Discussions on Various AI Topics

AI News - Problems with MMLU-Pro

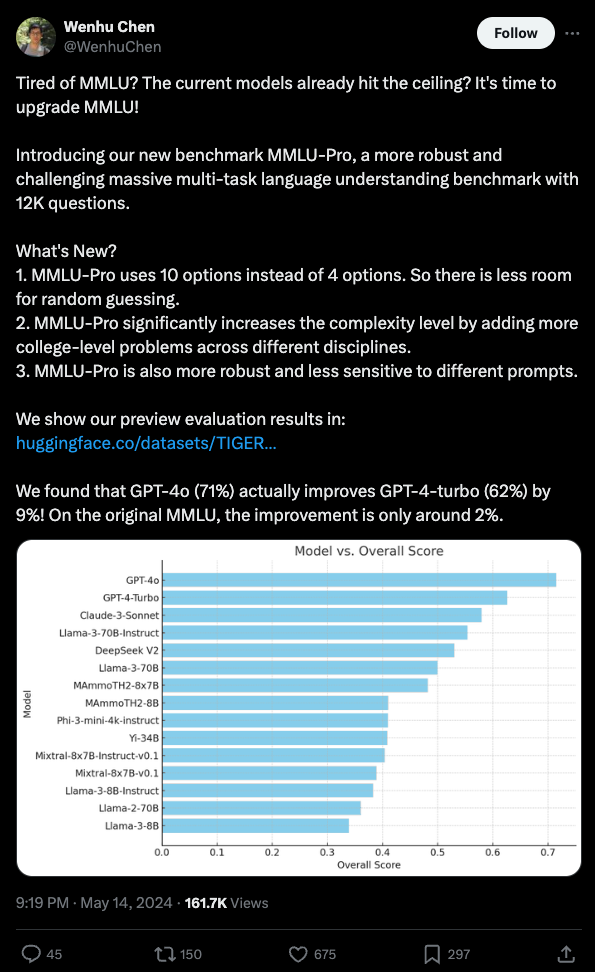

The article discusses problems with MMLU-Pro and its predecessor, MMLU. It highlights discrepancies in how models were evaluated: sampling parameters, system prompts, and the regex used to extract answers. Despite the benchmark's improvements, there are concerns that closed and open models were not evaluated the same way. The MMLU-Pro team acknowledges the discrepancies but downplays their impact. Prompt engineering alone can shift performance significantly, and the article hopes for better control over these sources of variance in benchmarks.
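To see why the answer-extraction regex matters, here is a minimal sketch that scores the same four completions with a strict rule and a more lenient one. Both patterns and the sample outputs are hypothetical illustrations, not the actual MMLU-Pro harness or any vendor's evaluation code.

```python
import re
from typing import Optional, Sequence

# Hypothetical extraction rules for 10-option (A-J) questions.
# Rules are tried in order; the first match wins.
STRICT_RULES = [re.compile(r"answer is \(?([A-J])\)?")]
LENIENT_RULES = STRICT_RULES + [re.compile(r"[Aa]nswer:\s*\(?([A-J])\)?")]


def extract(text: str, rules: Sequence[re.Pattern]) -> Optional[str]:
    """Return the first option letter matched by any rule, or None."""
    for rule in rules:
        match = rule.search(text)
        if match:
            return match.group(1)
    return None  # an unparseable output is scored as wrong


def accuracy(outputs: Sequence[str], gold: Sequence[str], rules) -> float:
    hits = sum(extract(o, rules) == g for o, g in zip(outputs, gold))
    return hits / len(gold)


# Four hypothetical completions that all "mean" option C.
outputs = [
    "Let's think step by step... The answer is (C).",
    "Answer: C",
    "**Final answer:** (C)",
    "The correct option is C.",
]
gold = ["C"] * 4

print(accuracy(outputs, gold, STRICT_RULES))   # 0.25
print(accuracy(outputs, gold, LENIENT_RULES))  # 0.5
```

The completions are identical in both runs, yet the score doubles, which is why two leaderboards can disagree about the same model unless they report the exact extraction rule.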

Technology Advancements in AI Discord Recap

This section covers recent AI news: the rapid rise in model training costs, a breakthrough in extending the lifespan of mice, DeepMind's AI that generates audio from video, new model releases and benchmarks, and concerns about the validity of AI leaderboards. It also highlights discussions of lessons learned building AI applications, the potential for training larger models on supercomputers, and humorous memes about AI development.

Exploring Recent AI Community Conversations

- Hidden Talents of Qwen Revealed: Community members praised the Qwen Team's underappreciated contributions, such as a new training video.

- GPU Showdown: AMD vs NVIDIA: A debate highlighted NVIDIA's dominance over AMD GPUs due to software ecosystem and energy efficiency, despite AMD's advancements.

- Phi-3 Training Troubles with Alpaca: Members shared solutions for an error during Phi-3 training on the Alpaca dataset, pointing to missing CUDA support and suggesting updates.

- Kaggle Constraints Provoke Innovation: Discussion focused on working around disk space limits on the Kaggle platform, highlighting the importance of reliable checkpoints.

- Empowering Job Seekers in AI Space: Proposals were made for a dedicated job channel in the AI community, reflecting the need for career-focused services.

- Encapsulating Complexity with LLM APIs: Rearchitecting code around LLM-style APIs to streamline complex tasks and save time.

- Exploring Governmental AI Scrutiny: Curiosity about the UK Government's AI framework for large language models, which aims to scrutinize and regulate AI technologies.

- Podcast Episode Storms Hacker News: A podcast episode shared on Hacker News attracted engagement and upvotes, reflecting an active online discourse.

- Fortnite Revamps Fun Factor: Fortnite's crossover-free updates sparked discussion and reactions on sites like Polygon.

- Merging AI Minds: Enthusiasm for the AI Engineer World Fair's deep dives into model merging strategies, along with debates on determining merging strategies automatically.

- CUDA Credentials Clash: Debate on the value of CUDA certification versus public work for hiring, with a consensus favoring demonstrable skills.

- Compiling The Path Forward: Lightning AI is seeking compiler enthusiasts to help speed up PyTorch models with the Thunder project's compiler.

- PyTorch Profilers Peek at Performance: Discussion of the importance of the torch.compile manual and of alternative approaches to optimization.

- The Quantum of Sparsity: Discussions on optimizing sparsity patterns with the cuSPARSELt and CUTLASS libraries for efficient sparse matrix multiplication.

- LLM Lessons Laid Out: Ideation for an LLM101n course to guide users from basics to state-of-the-art model practices.

- Critique Companions Boost AI Reward Models: Exploring synthetic critiques from large language models to enhance preference learning.

- Test-Time-Training Layers Break RNN Constraints: Introduction of TTT layers, which replace the RNN hidden state with an ML model to build architectures with linear complexity.

- Data Dialogue with Dataline: Launch of the Dataline platform for querying multiple databases through an AI interface, plus a study on LLM reasoning capabilities.

- GPT-4 Benchmark Fever: Observations on GPT-4's improved performance on benchmarks, with a focus on in-context examples and BitNet architecture.

- RAG and Reality: Hallucinations Under the Lens: A video examining the reliability of LegalTech tools, with proposals for consistent citations.

- Trading on Autopilot: LlamaIndex Drives AI Stock Assistant: An AI trading assistant built on LlamaIndex agents to enhance trading strategies.

- JPEG XL Takes the Crown: Recognition of JPEG XL as a leading image codec, emphasizing its efficiency over other formats.

- Kolors Repository Gains Attention: Surge of interest in Kolors GitHub repository and predictions of its impact.

- Noise Scheduling Sparks Debate: Debate over the effectiveness of noise schedules with zero terminal SNR, with references to the SDXL paper.

- Meta's VLM Ads Face Scrutiny: Concerns raised over Meta's advertising of VLM rather than releasing Llama3VLM.

- VALL-E 2's Text-to-Speech Breakthrough: VALL-E 2's advancements in text-to-speech systems with zero-shot TTS capabilities.

- Parsing CSV through LangChain: Exploring modern approaches for handling CSV files in LangChain beyond previous constraints.

- Async Configurations Unraveled: Demystification of async configurations in LangChain for better task management.

- Local LLM Experimentation Scales Up: Discussions on running LLMs such as phi3 on personal rigs with NVIDIA RTX 4090 GPUs.

- LangGraph Cloud Service Stirs Speculation: Anticipation of LangGraph Cloud's arrival and discussions on deployment paradigms.

- In-browser Video Analysis Tool Intrigues: Interest in 'doesVideoContain', a tool for in-browser content scanning within videos.

- RAG's Skills Library Sharpens Actions: Integration of a skills library with RAG for enhancing specified actions and its potential in diverse AI applications.
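The noise-scheduling debate in the list above turns on whether a diffusion schedule actually reaches pure noise (SNR 0) at the last timestep. A minimal sketch of the rescaling proposed by Lin et al. ("Common Diffusion Noise Schedules and Sample Steps are Flawed"), written in plain Python; the "scaled linear" schedule and its endpoints are assumed example values, not taken from the discussion.

```python
import math
from typing import List


def scaled_linear_betas(n: int = 1000, start: float = 0.00085, end: float = 0.012) -> List[float]:
    """Assumed example schedule: betas linear in sqrt-space, then squared."""
    a, b = math.sqrt(start), math.sqrt(end)
    return [(a + (b - a) * i / (n - 1)) ** 2 for i in range(n)]


def alphas_cumprod(betas: List[float]) -> List[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def enforce_zero_terminal_snr(betas: List[float]) -> List[float]:
    """Rescale so sqrt(alpha_bar) hits 0 at the last step, keeping step 0 unchanged."""
    sqrt_ab = [math.sqrt(x) for x in alphas_cumprod(betas)]
    first, last = sqrt_ab[0], sqrt_ab[-1]
    # Shift so the last value becomes 0, then scale so the first value is restored.
    sqrt_ab = [(s - last) * first / (first - last) for s in sqrt_ab]
    ab = [s * s for s in sqrt_ab]
    # Convert cumulative products back into per-step betas.
    alphas = [ab[0]] + [ab[t] / ab[t - 1] for t in range(1, len(ab))]
    return [1.0 - a for a in alphas]


def terminal_snr(betas: List[float]) -> float:
    ab = alphas_cumprod(betas)[-1]
    return ab / (1.0 - ab)


betas = scaled_linear_betas()
print(terminal_snr(betas))                             # small but nonzero
print(terminal_snr(enforce_zero_terminal_snr(betas)))  # 0.0
```

Lin et al. pair this rescaling with v-prediction, since at SNR 0 the model input is pure noise and predicting that noise tells the model nothing about the image.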

Detailed AI Chatroom Discussions

Across Discord channels for several AI projects, users discussed the capabilities of different models, ran into image generation performance issues, and speculated about AI model integration. Other threads covered cost-effective strategies for collecting assets and the future of vectorization in Python.

HuggingFace Cool Finds

Browse with in10search Tabs Sidepanel AI:

A new browser sidepanel extension called in10search Tabs Sidepanel AI integrates horizontal tabs and ChatGPT. More details can be found on GitHub.

ZeroGPU HuggingFace Space for Stable Diffusion Models:

A member introduced a ZeroGPU HuggingFace Space that lets users compare multiple Stable Diffusion models, such as SD3 Medium, SD2.1, and SDXL.

qdurllm: Local Search Engine with Qdrant & LLMs:

The newly launched open-source product qdurllm combines Qdrant, URL scraping, and Large Language Models for local search and chat. Explore further on its GitHub repository.

AI on-call developer: merlinn:

An AI on-call developer named merlinn helps investigate production incidents by providing contextual information. Check it out and provide feedback on GitHub.

gary4live Ableton plug-in:

A fun Ableton plug-in called gary4live was released on Gumroad. It is a max4live device that brings playful AI workflows into Ableton, and it is available for free.

HuggingFace Computer Vision Updates

The HuggingFace Discord channel for computer vision shared updates on models and tools for object detection and segmentation. Highlights include a recommendation of Torchmetrics for object detection metrics, the release of the RT-DETR model for real-time object detection, the CogVLM2 model for vision-language tasks, support for zero-shot object detection models in the Transformers library, and the extension of MaskFormer models to instance segmentation.
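Detection metrics such as the mean average precision that Torchmetrics computes are built on intersection-over-union (IoU) between predicted and ground-truth boxes. A minimal sketch of IoU for axis-aligned boxes, assuming the (x1, y1, x2, y2) corner format; libraries differ on box formats, so check which one a given tool expects.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Corners of the overlap rectangle (it may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
```

A prediction usually counts as a true positive when its IoU with a matching ground-truth box exceeds a threshold such as 0.5; COCO-style mAP averages the result over thresholds from 0.5 to 0.95.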

LM Studio Models and Vision Capabilities

Issues and Discussions

- Memory Constraints on M2 MacBook: A model failed to load on an M2 MacBook with a 32k context; the user planned to retry on an M3 MacBook.

- Compatibility of InternLM Models for Vision Tasks: Some InternLM models worked well in Python but ran into compatibility issues for vision tasks in LM Studio.

- Support for GLM4 Models in llama.cpp: A user asked whether LM Studio supports GLM4 models now that llama.cpp has added support, aiming to run CodeGeeX models efficiently.
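Long contexts often fail to load because of the key/value cache, which grows linearly with context length. A back-of-the-envelope sketch; the dimensions are assumed to match Llama 3 8B's published configuration (32 layers, 8 key/value heads, head dimension 128) with an fp16 cache, not the model from the discussion.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for a single sequence."""
    # Factor 2: one tensor for keys and one for values, in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_elem


GIB = 1024 ** 3
for ctx in (4096, 8192, 32768):
    # Assumed Llama-3-8B-like dimensions; bytes_per_elem=2 means an fp16 cache.
    size = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, context_len=ctx)
    print(f"{ctx:>6} tokens: {size / GIB:.2f} GiB")
```

The loop prints 0.50, 1.00, and 4.00 GiB. That cache sits on top of the weights, so moving to a 32k context can push a model past the memory available on a laptop. Quantizing the cache or shortening the context shrinks this term proportionally.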


Efficient Coding with LLMs

A user argued that rearchitecting code around LLM-style APIs simplifies complex coding tasks, leaving humans to focus on communicating with and integrating systems. In their view, gluing APIs together can turn time-consuming tasks into straightforward zero-shot LLM prompts, saving effort in the long run.
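A minimal sketch of that pattern, assuming a hypothetical `complete(prompt) -> str` callable for whatever model is in use: a fiddly task is hidden behind an ordinary typed function whose body is one zero-shot prompt plus light parsing. The `fake_llm` stub below stands in for a real model client.

```python
from typing import Callable, List

# Hypothetical signature for any text-completion backend.
Complete = Callable[[str], str]


def extract_action_items(notes: str, complete: Complete) -> List[str]:
    """Turn free-form meeting notes into action items with a single zero-shot prompt."""
    prompt = (
        "List every action item in the notes below, one per line, "
        "with no numbering or extra text.\n\n"
        f"Notes:\n{notes}"
    )
    reply = complete(prompt)
    # Normalise the reply into a clean Python list, dropping bullet markers.
    return [line.strip("-* ").strip() for line in reply.splitlines() if line.strip()]


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call."""
    return "- Send the report\n- Book the venue\n"


print(extract_action_items("Alice sends the report; Bob books the venue.", fake_llm))
# ['Send the report', 'Book the venue']
```

Callers see a plain function, so the prompt, the model behind `complete`, and the parsing can all change without touching them.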

AI Engineer World Fair Highlights

Key Highlights:

- The AI Engineer World Fair featured notable talks including Justine Tunney's keynote, a highly-praised AI leadership workshop, and interesting discussions on LLMs and model merging.

- Despite some logistics issues, the conference was well-received with diverse, high-energy sessions on topics like AI-generated music and Tool Use with Open-Source LLMs.

- Members discussed the differences between LlamaFile and Ollama, emphasizing portability, optimization, and compatibility with a large number of models.

- A suggestion was made for an adapter to combine the strengths of both tools.

- Model merging techniques were a hot topic, with discussions on using deep learning models to converge on the best strategy.

- Concerns about the intellectual validity of this approach were noted.

- Concerns were raised about wearable devices recording off-mic moments without user consent, and a proposed solution involved desktop integration and notification features.

- Ideas for future AI Engineer World Fairs included a longer event, dedicated tracks for specific applications such as AI girlfriend apps, and gamifying the conference schedule.
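For readers new to model merging, the simplest strategy is a weighted average of matching parameters from models fine-tuned from the same base. A minimal sketch over plain dictionaries of float lists that stand in for real state dicts; the parameter names and the 0.5 weight are hypothetical, and this is not one of the automated strategy-search approaches debated at the fair.

```python
from typing import Dict, List

StateDict = Dict[str, List[float]]  # parameter name -> flattened values


def linear_merge(a: StateDict, b: StateDict, weight: float = 0.5) -> StateDict:
    """Return weight * a + (1 - weight) * b for every shared parameter."""
    if a.keys() != b.keys():
        raise ValueError("models must have identical parameter names")
    merged: StateDict = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [weight * x + (1 - weight) * y for x, y in zip(a[name], b[name])]
    return merged


model_a = {"layer0.weight": [1.0, 2.0], "layer0.bias": [0.0]}
model_b = {"layer0.weight": [3.0, 4.0], "layer0.bias": [1.0]}
print(linear_merge(model_a, model_b))
# {'layer0.weight': [2.0, 3.0], 'layer0.bias': [0.5]}
```

Methods such as SLERP, TIES, and DARE change how each parameter combination is computed; an automated search would then tune choices like `weight`, possibly per layer.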

Nous Research AI ▷ world-sim

WorldSIM simulation success

- Discussions on the success of the WorldSIM simulation and its implications.

Next era of simulation

- Exploration of the next era of simulation and its potential advancements.

Mojo and Eleuther Discussions

WorldSIM Buddha sim achieves enlightenment swiftly:

- A user shared a simulation based on Buddhist principles that evolved into a single enlightened population in under 30K steps.

- The simulation used up the user's credits within a single lunch hour.

Anticipation builds for next era of simulation:

- A teaser suggested that resources are being directed toward the next era of simulation.

- The teaser sparked excitement and curiosity in the channel.

Modular (Mojo 🔥) general:

- Discussions covered WSL2 update troubles, Python dependency conflicts, rounding bugs in Mojo, 'Mojician' badge criteria, and unexpected behavior of the Int64 and Float64 types.

Modular (Mojo 🔥) mojos:

- Topics included the del method, Mojo and 3D graphics, the availability of libc functions, the lack of cross-compilation support, and usage of the partition method.

Eleuther announcements:

- Top-k Sparse Autoencoders released for Llama 3 8B.

- An automated pipeline for SAE features is in progress, and training for the 70B model is underway.
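A top-k sparse autoencoder keeps only the k largest latent activations for each input, which sets the sparsity level directly instead of tuning an L1 penalty. A minimal pure-Python sketch of one common formulation of the forward pass; the tiny weights are hypothetical, and the released Llama 3 8B SAEs are far larger and trained with their own code.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row per output dimension


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def topk_sae_forward(x: Vector, w_enc: Matrix, b_enc: Vector,
                     w_dec: Matrix, b_dec: Vector, k: int) -> Tuple[Vector, Vector]:
    """Encode x to a k-sparse latent, then decode it back to input space."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [p + b for p, b in zip(matvec(w_enc, centered), b_enc)]
    # Keep the k largest pre-activations (passed through ReLU); zero out the rest.
    keep = set(sorted(range(len(pre)), key=lambda i: pre[i], reverse=True)[:k])
    latent = [max(pre[i], 0.0) if i in keep else 0.0 for i in range(len(pre))]
    recon = [r + b for r, b in zip(matvec(w_dec, latent), b_dec)]
    return latent, recon


# Hypothetical toy SAE: 2-dimensional input, 4 latents, k = 1.
w_enc = [[1, 0], [0, 1], [1, 1], [-1, 0]]
b_enc = [0.0, 0.0, 0.0, 0.0]
w_dec = [[1, 0, 0.5, -1], [0, 1, 0.5, 0]]
b_dec = [0.0, 0.0]
x = [2.0, 1.0]

latent, recon = topk_sae_forward(x, w_enc, b_enc, w_dec, b_dec, k=1)
print(latent)  # [0.0, 0.0, 3.0, 0.0]
print(recon)   # [1.5, 1.5]
```

Training minimizes the reconstruction error between `x` and `recon`; because exactly k latents fire per input, each latent is a candidate interpretable feature.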

Eleuther general:

- Topics included the search for a PDF markup tool, training a ViT with I-JEPA, evaluating the LLaVA LLM, Copilot's randomness when choosing names, and determinism in Copilot completions.

Eleuther research:

- Research findings discussed included the T-FREE tokenizer, model expansion efficiency, the BitNet Transformer, gradient conflicts in diffusion models, and quantization for inference.
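Of these research threads, BitNet is the easiest to illustrate. A minimal sketch of the absmean weight quantizer described for BitNet b1.58, which maps weights to {-1, 0, +1} with a single per-tensor scale; whether the discussion concerned this variant or the original binary BitNet is an assumption here, and the weights are made-up sample values.

```python
from typing import List, Tuple


def absmean_ternary(weights: List[float], eps: float = 1e-5) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, 1}, using the mean absolute value as the scale."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    # Divide by the scale, round to the nearest integer, then clip into [-1, 1].
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


def dequantize(q: List[int], gamma: float) -> List[float]:
    """Approximate the original weights from ternary codes and the scale."""
    return [v * gamma for v in q]


w = [0.9, -0.05, 0.4, -1.3]
q, gamma = absmean_ternary(w)
print(q)  # [1, 0, 1, -1]
print(dequantize(q, gamma))
```

With ternary weights, a matrix multiplication reduces to additions and subtractions, with the scale applied once afterwards, which is the source of BitNet's efficiency claims.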

LangChain AI: General Discussion

CSV File Handling in LangChain: A user seeks advice on modern approaches to handling CSV files with LangChain, aiming to overcome previous limitations.
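One common approach, roughly what LangChain's CSVLoader does, turns each row into its own document whose text is the row's `column: value` pairs, so rows can be embedded and retrieved individually. A stdlib-only sketch of that idea; the `Doc` class and `csv_to_docs` function are hypothetical stand-ins, not LangChain's API.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Doc:  # hypothetical stand-in for a LangChain Document
    page_content: str
    metadata: Dict[str, object] = field(default_factory=dict)


def csv_to_docs(text: str, source: str) -> List[Doc]:
    """Create one Doc per CSV row, rendering the row as 'column: value' lines."""
    reader = csv.DictReader(io.StringIO(text))
    docs = []
    for row_index, row in enumerate(reader):
        content = "\n".join(f"{k}: {v}" for k, v in row.items())
        docs.append(Doc(content, {"source": source, "row": row_index}))
    return docs


data = "name,role\nAda,engineer\nLin,analyst\n"
for doc in csv_to_docs(data, "team.csv"):
    print(doc.metadata, repr(doc.page_content))
```

Per-row documents suit lookup questions; for aggregate questions (sums, filters) over a CSV, loading it into a DataFrame or SQL table and letting the model write queries often works better.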

Async Configuration in LangChain: A user inquired about using the ensure_config() method in an asynchronous setting within LangChain, looking for guidance on retrieving thread_id in a ToolNode using astream_events.
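As we understand it, `ensure_config()` fills in the run config from a context variable when none is passed, and LangChain's docs advise passing `config` explicitly in async code on Python versions before 3.11. A stdlib-only sketch of the mechanism; `current_config` is a hypothetical variable, not LangChain's internal one, and the `configurable.thread_id` layout mirrors LangGraph's convention.

```python
import asyncio
import contextvars
from typing import Any, Dict, Optional

# Hypothetical stand-in for the per-run config a framework keeps in context.
current_config = contextvars.ContextVar("current_config", default=None)


def get_thread_id(config: Optional[Dict[str, Any]] = None) -> Optional[str]:
    """Prefer an explicitly passed config; fall back to the ambient context."""
    cfg = config if config is not None else current_config.get()
    if cfg is None:
        return None
    return cfg.get("configurable", {}).get("thread_id")


async def tool(config: Optional[Dict[str, Any]] = None) -> Optional[str]:
    await asyncio.sleep(0)  # simulate async work
    return get_thread_id(config)


async def main() -> None:
    cfg = {"configurable": {"thread_id": "t-42"}}
    # Explicit passing works on every Python version.
    print(await tool(cfg))
    # Ambient lookup works here because create_task copies the current context.
    current_config.set(cfg)
    print(await asyncio.create_task(tool()))


asyncio.run(main())
```

Passing `config` explicitly is the more robust choice, since it does not depend on how a framework schedules its tasks.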

LangGraph ToolNode Errors: A user reported errors with ToolNode in create_react_agent from langgraph.prebuilt, which raised NameError: name 'Type' is not defined, and asked for troubleshooting help.

Running LLMs on Local Machines: Discussions on running smaller LLM models like phi3, mistral, and llama3 on local PCs with NVIDIA GPUs, along with considerations for running larger-scale models with 70B parameters.

LangChain Utility Functions: A user sought help converting model responses to JSON within LangChain and was pointed to JsonOutputParser, combined with a Pydantic model.
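`JsonOutputParser` with a Pydantic model is the LangChain route. The stdlib sketch below shows the same two steps without LangChain: pull a JSON object out of a reply that may be wrapped in a markdown code fence, then check it against a schema. The `Ticket` dataclass and both helper functions are hypothetical, and the dataclass stands in for a Pydantic model.

```python
import json
import re
from dataclasses import dataclass

FENCE = "`" * 3  # three backticks, built indirectly so this sketch nests in markdown


@dataclass
class Ticket:  # hypothetical schema; in LangChain a Pydantic model plays this role
    title: str
    priority: int


def parse_json_reply(reply: str) -> dict:
    """Extract the outermost JSON object from a model reply, fenced or not."""
    fenced = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, reply, re.DOTALL)
    body = fenced.group(1) if fenced else reply
    start, end = body.find("{"), body.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    return json.loads(body[start:end + 1])


def to_ticket(data: dict) -> Ticket:
    """Minimal schema check: required keys exist and have the expected types."""
    if not isinstance(data.get("title"), str) or not isinstance(data.get("priority"), int):
        raise ValueError(f"reply does not match schema: {data}")
    return Ticket(title=data["title"], priority=data["priority"])


reply = f'Sure!\n{FENCE}json\n{{"title": "Login fails", "priority": 2}}\n{FENCE}'
print(to_ticket(parse_json_reply(reply)))  # Ticket(title='Login fails', priority=2)
```

Keeping extraction and validation separate makes it clear whether a failure came from a malformed reply or from a well-formed reply with the wrong fields.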

LlamaIndex Blog

Agentic RAG for Stock Trading 📈🤖:

A tutorial video shows how to build an AI trading assistant powered by LlamaIndex's agent, tool, and RAG abstractions, capable of performing a range of stock-trading tasks.

Toolkits for RAG Dataset Generation:

Creating an evaluation dataset for RAG is challenging, but Giskard AI offers a toolkit for generating diverse question sets. This toolkit covers a broader range of questions compared to most automatic dataset generators, as discussed in their article.

Agents as Microservices:

Llama-agents let both agent services and tool services run as microservices that can handle large volumes of requests, as explained in the accompanying post.

Multi-Document Financial Analyst Agent, RAG Retrieval Evaluations, and AI Application Mentorship Requests

- Multi-Document Financial Analyst Agent: A Multi-Document Financial Analyst Agent can efficiently analyze categorized documents such as 10-K reports. Pavan Mantha shows how to use LlamaIndex's features for this purpose.

- Importance of RAG Retrieval Evaluations: Retrieval evaluations are crucial to a RAG system's effectiveness and accuracy. Choosing the right metrics and making the evaluation dataset representative are key steps, elaborated in an article by Ross A.

- Request for AI Application Mentorship: A member sought mentorship for building an AI application, wanting guidance while handling the execution themselves. Suggestions included starting with the 5-line starter example in LlamaIndex's documentation.
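Two metrics that commonly appear in RAG retrieval evaluations are hit rate (did any relevant chunk appear in the top k?) and mean reciprocal rank (how high did the first relevant chunk rank?). A minimal sketch; the retrieved ids and relevance labels are made-up examples, and the article by Ross A. may recommend different metrics.

```python
from typing import List, Sequence, Set


def hit_rate(results: Sequence[List[str]], relevant: Sequence[Set[str]], k: int) -> float:
    """Fraction of queries with at least one relevant id in the top k results."""
    hits = sum(any(doc in rel for doc in res[:k]) for res, rel in zip(results, relevant))
    return hits / len(results)


def mean_reciprocal_rank(results: Sequence[List[str]], relevant: Sequence[Set[str]]) -> float:
    """Average of 1/rank of the first relevant id (0 when none is retrieved)."""
    total = 0.0
    for res, rel in zip(results, relevant):
        for rank, doc in enumerate(res, start=1):
            if doc in rel:
                total += 1.0 / rank
                break
    return total / len(results)


# Made-up retrieved ids for three queries, and the ids judged relevant for each.
results = [["d3", "d1", "d7"], ["d2", "d9", "d4"], ["d5", "d6", "d8"]]
relevant = [{"d1"}, {"d2"}, {"d0"}]
print(hit_rate(results, relevant, k=3))         # 2 of 3 queries hit
print(mean_reciprocal_rank(results, relevant))  # (1/2 + 1 + 0) / 3 = 0.5
```

Hit rate only asks whether retrieval succeeded, while MRR also rewards putting the right chunk first, which matters when only the top few chunks fit in the prompt.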

Discussions on Various AI Topics

This section covers discussions on various AI-related topics including RAG, early AI boom hype, retrieval and cost efficiency in enterprises, Buzz excitement, FPGA meeting, Calendly scheduling, Google image searches for sprites, purchased assets for tilesets, Gemma 2 9B language model, serverless AI inference with Gemma 2, and experiments with base models in AI research.

FAQ

Q: What are some advancements in technology related to AI models mentioned in the article?

A: Advancements related to AI models mentioned in the article include the rapid increase in AI model training costs, breakthroughs in extending the lifespan of mice, DeepMind's AI generating audio from video, benchmarking of various AI models, and concerns over the validity of AI leaderboards.

Q: What were some key highlights discussed at the AI Engineer World Fair?

A: Key highlights discussed at the AI Engineer World Fair included Justine Tunney's keynote, a highly-praised AI leadership workshop, discussions on large language models (LLMs) and model merging, and diverse sessions on AI-related topics such as AI-generated music and Tool Use with Open-Source LLMs.

Q: What are some noteworthy topics discussed in the section related to AI-related Discord channels?

A: Some noteworthy topics discussed in the section related to AI-related Discord channels include the capabilities of different AI models, issues with image generation performance, and speculations about AI model integration. The conversations reflect the dynamic and evolving nature of AI research and development in online communities.

Q: What are some specific discussions related to CSV file handling and async configurations in LangChain?

A: Specific discussions related to CSV file handling in LangChain include seeking advice on modern approaches to overcome previous constraints, while async configuration inquiries focus on using the `ensure_config()` method in an asynchronous setting within LangChain for better task management.

Q: What were the key announcements and discussions related to Eleuther mentioned in the article?

A: Key announcements and discussions related to Eleuther included the release of Top-k Sparse Autoencoders for Llama 3 8B, efforts towards a 70B model, struggles to find a PDF markup tool, training ViT with IJEPA, and discussions on model evaluation, research findings, and tokenizer efficiency.

Q: Can you provide an overview of discussions related to RAG datasets and agents in the article?

A: Discussions related to RAG datasets and agents include the challenge of creating evaluation datasets for RAG, addressed by Giskard AI's toolkit; setting up Llama-agents as microservices for handling requests; and using LlamaIndex's features to analyze categorized documents with a Multi-Document Financial Analyst Agent.
